What is a robots.txt file? 3 simple ways to create a robots.txt file for WordPress

Robots.txt is a file on your website that tells search engine crawlers which parts of the site they may crawl. You can edit the file from any PC or laptop. The following article explains how to create a robots.txt file for WordPress.

What is a robots.txt file?

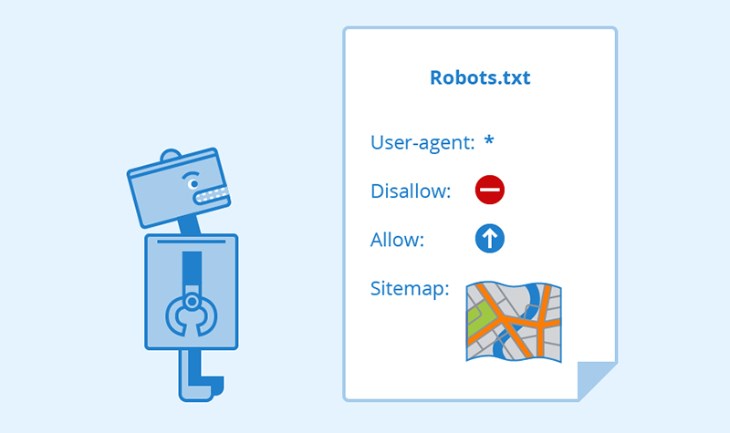

Robots.txt is a plain text file with the .txt extension. It is part of the Robots Exclusion Protocol (REP), which regulates how web robots (search engine crawlers) crawl the web, access and index content, and make that content available to users.

Robots.txt is part of Robots Exclusion Protocol

Robots.txt file syntax

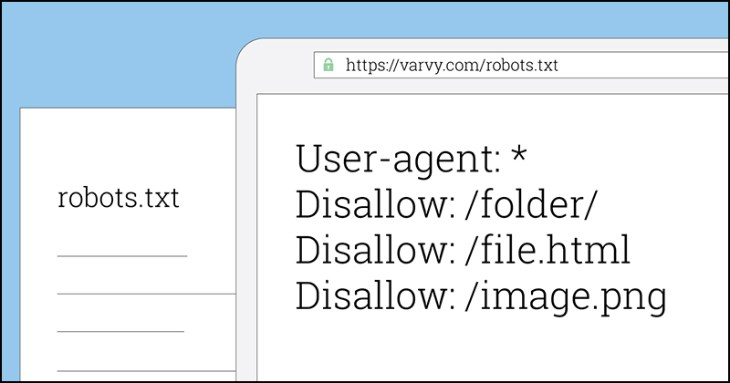

Robots.txt uses a small set of directives, including:

- User-agent : The name of the web crawler that the rules apply to (e.g. Googlebot, Bingbot, …).

- Disallow : Tells the User-agent not to crawl a specific URL. Each Disallow line can name only one URL path, so use a separate line for every path you want to block.

- Allow : Tells a crawler that it may access a page or subfolder even though its parent page or folder is disallowed. Googlebot supports this directive, as do other major crawlers such as Bingbot.

- Crawl-delay : Tells web crawlers how long to wait before loading and crawling the page’s content. Note, however, that Googlebot ignores this directive; for Google, crawl rate has historically been adjusted in Google Search Console instead.

- Sitemap : Gives the location of any XML sitemap associated with the site. Note that only the Google, Ask, Bing and Yahoo search engines support this directive.

The robots.txt file includes many different syntaxes
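The directives above can be tried out with Python's standard `urllib.robotparser` module. This is only a minimal sketch: the file content, the paths, the sitemap URL and the crawler name `ExampleBot` are all made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; paths and sitemap URL are illustrative only.
ROBOTS_TXT = """\
User-agent: *
Allow: /private/public-page.html
Disallow: /private/
Crawl-delay: 10

Sitemap: https://abcdef.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Disallow blocks everything under /private/ ...
blocked = parser.can_fetch("ExampleBot", "https://abcdef.com/private/report.html")
# ... except the page that the earlier Allow line explicitly permits.
allowed = parser.can_fetch("ExampleBot", "https://abcdef.com/private/public-page.html")
# Crawl-delay is exposed as a number of seconds for bots that honor it.
delay = parser.crawl_delay("ExampleBot")
# Sitemap lines belong to the whole file, not to one User-agent group.
sitemaps = parser.site_maps()

print(blocked, allowed, delay, sitemaps)
```

Python's parser applies the first rule that matches, which is why the Allow line is listed before the Disallow line here. Google instead picks the most specific matching rule, so real crawlers may resolve overlapping rules differently.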

Why create a robots.txt file?

Robots.txt is a file placed on a web server to instruct search engine robots on how to visit a website. A well-configured robots.txt file helps search engines crawl your site more efficiently, which in turn can support how the site is found and ranked.

The robots.txt file lets you specify which parts of your website search engine robots are allowed or forbidden to access. For example, if your website contains login pages or other private areas, you can use robots.txt to ask search engine robots not to crawl these pages.

Creating a robots.txt file is not mandatory. However, it is one of the best ways to help search engines better understand your site and improve how it is displayed in search results.

The robots.txt file helps search engines better understand your Website

How the robots.txt file works

Search engines use the robots.txt file to control crawling as follows:

- Step 1 : Search engines crawl (scrape and analyze) different web pages by following links from one page to the next. This crawling process is also known as “spidering”, and it is how the content of a website is discovered.

- Step 2 : After crawling, the search engine indexes the content so that it can answer users’ search queries. Before crawling a site, the bot looks for the robots.txt file, which tells it which parts of the site it may crawl. With this file, bots can be instructed to crawl the site correctly and efficiently.

Search engine bots follow links to crawl pages
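The two steps can be sketched as a toy crawler that follows links but consults the robots.txt rules before loading each page. Everything here is hypothetical: the in-memory `SITE_LINKS` map stands in for real pages, and `ExampleBot` is a made-up crawler name. Real search engine crawlers are far more elaborate.

```python
from collections import deque
from urllib.robotparser import RobotFileParser

# Hypothetical site: each path maps to the links found on that page.
SITE_LINKS = {
    "/": ["/blog/", "/wp-admin/"],
    "/blog/": ["/blog/post-1/", "/"],
    "/blog/post-1/": [],
    "/wp-admin/": ["/wp-admin/secret/"],
    "/wp-admin/secret/": [],
}


def crawl(start, robots_lines, user_agent="ExampleBot"):
    """Breadth-first crawl that skips paths disallowed by robots.txt."""
    rules = RobotFileParser()
    rules.parse(robots_lines)
    queue, seen, crawled = deque([start]), {start}, []
    while queue:
        path = queue.popleft()
        if not rules.can_fetch(user_agent, path):
            continue  # disallowed: the page is never loaded
        crawled.append(path)  # Step 1: the page is fetched ("spidered")
        for link in SITE_LINKS.get(path, []):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return crawled  # Step 2 would index the content of these pages


pages = crawl("/", ["User-agent: *", "Disallow: /wp-admin/"])
print(pages)
```

Because `/wp-admin/` is never loaded, the link to `/wp-admin/secret/` is never discovered either, which matches the note later in the article about links on blocked pages.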

Where is the robots.txt file located on the website?

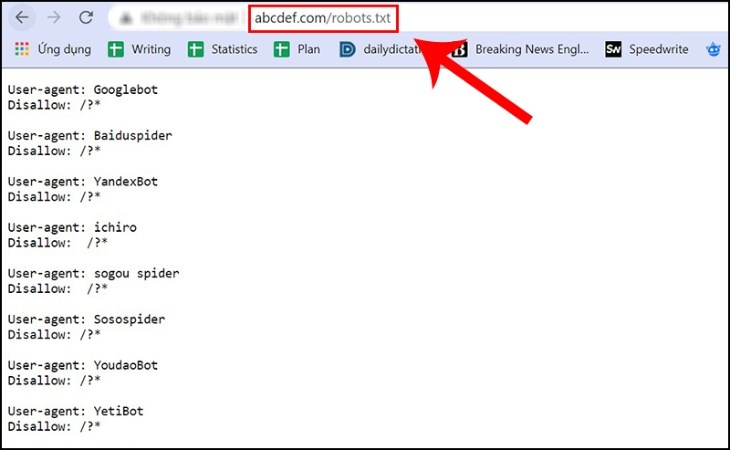

When you create a WordPress site, WordPress automatically serves a robots.txt file at the root of the site. This default file is generated virtually unless a physical robots.txt file exists in the root directory of the server. For example, if your site is located at abcdef.com, you can access the robots.txt file at abcdef.com/robots.txt.

The robots.txt file contains rules that specify how search engines should access your site. Typically, this file prohibits bots from accessing system folders such as wp-admin or wp-includes .

Specifically, the “User-agent: *” rule applies to all bots that visit the site, and “Disallow: /wp-admin/” and “Disallow: /wp-includes/” tell bots not to access these two directories.

The robots.txt file is located in the root directory

Check whether a website has a robots.txt file

To confirm the existence of the robots.txt file on your website, you can follow these steps:

- Step 1 : Enter the root domain of the website in the address bar of your browser (e.g. abcdef.com).

- Step 2 : Add /robots.txt to the end of the address (e.g. abcdef.com/robots.txt).

- Step 3 : Press Enter to open the website’s robots.txt file.

If the website has a robots.txt file, its contents are displayed in the browser. If the file does not exist, the browser shows an error message instead.

Structure of checking the website’s robots.txt file
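The same check can be scripted. The sketch below assumes the site is served over HTTPS and treats any response other than HTTP 200 as "no file". The function names and the `opener` parameter (there only so the network call can be swapped out) are illustrative, not part of any tool mentioned in this article.

```python
from urllib.error import URLError
from urllib.request import urlopen


def robots_url(root_domain):
    """Steps 1-2: take the root domain and append /robots.txt."""
    host = root_domain.strip().split("://")[-1].strip("/")
    return f"https://{host}/robots.txt"  # assumption: the site uses HTTPS


def has_robots_txt(root_domain, opener=urlopen):
    """Step 3: request the file; an HTTP 200 response means it exists."""
    try:
        with opener(robots_url(root_domain), timeout=10) as response:
            return response.status == 200
    except URLError:  # also covers HTTPError responses such as 404
        return False


print(robots_url("abcdef.com"))
```

Calling `has_robots_txt("abcdef.com")` performs a real request, so it is left out of the listing.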

What rules should be added to the WordPress robots.txt file?

A simple WordPress robots.txt file usually contains a single group of rules that applies to every bot. However, if you want to apply different rules to different bots, you can add a separate group of rules under a User-agent declaration for each bot. For example, you can create one group that applies to all bots and another that applies only to Bingbot.

If the Bingbot group disallows /wp-admin/ and the general group does not, Bingbot is blocked from accessing /wp-admin/ while the bots of other search engines can still access it.
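The article's original listing is not available here, so the following is an assumed reconstruction of two such rule groups, checked with Python's standard `urllib.robotparser`. The `Allow: /` line in the general group is one possible way to leave everything open to other bots.

```python
from urllib.robotparser import RobotFileParser

# Assumed reconstruction of the two rule groups, not the article's own listing.
ROBOTS_TXT = """\
User-agent: *
Allow: /

User-agent: Bingbot
Disallow: /wp-admin/
"""

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())

bing_admin = rules.can_fetch("Bingbot", "/wp-admin/")      # Bingbot group applies
google_admin = rules.can_fetch("Googlebot", "/wp-admin/")  # falls back to the * group
bing_blog = rules.can_fetch("Bingbot", "/blog/")           # no Bingbot rule matches

print(bing_admin, google_admin, bing_blog)
```

A bot that finds a group naming it follows that group only. This is why Bingbot's access to `/blog/` is decided by the Bingbot group, which contains no rule against it.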

Rules in the robots.txt file

3 ways to create a WordPress robots.txt file

Method 1: Use Yoast SEO

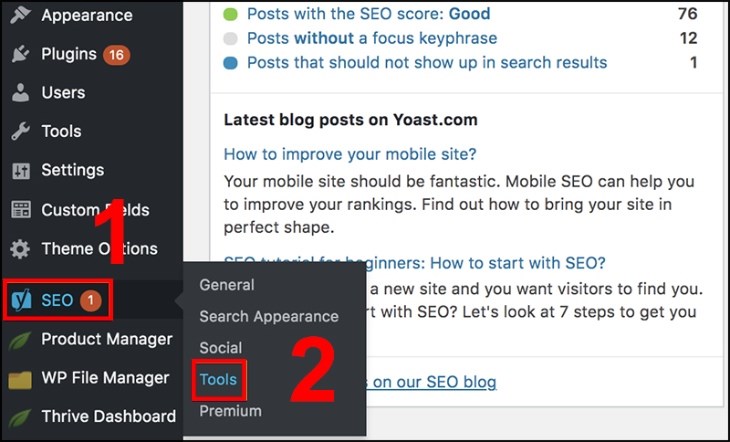

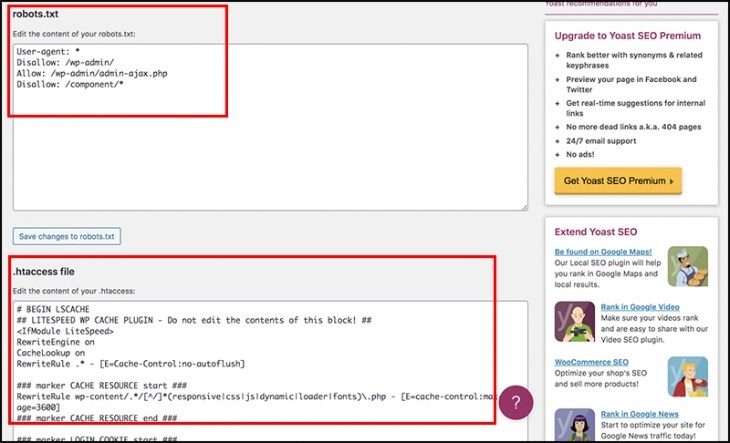

Step 1 : Log in to your site to open the WordPress Dashboard.

Step 2 : Select SEO in the menu on the left, then select Tools. This opens the SEO tools management screen.

Select Tools in the SEO section of the WordPress Dashboard interface

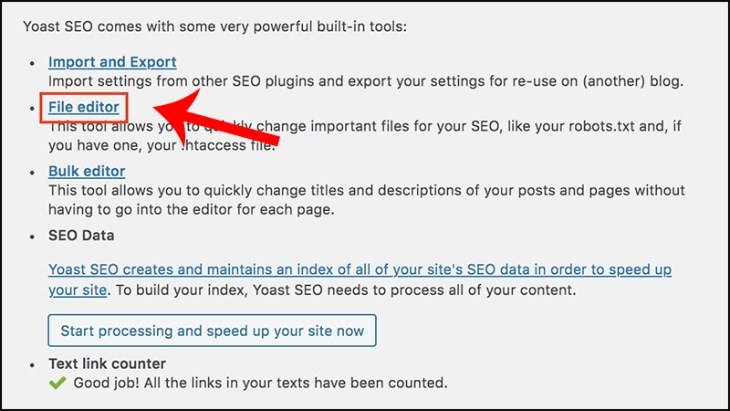

Step 3 : Select File editor to open the page for editing SEO-related files, including the robots.txt file. Here, you can create, edit and save the robots.txt file for your site.

Select File editor to go to the robots.txt file editing page

You can edit the robots.txt file at the locations as shown in the image

Method 2: Use the All in One SEO plugin

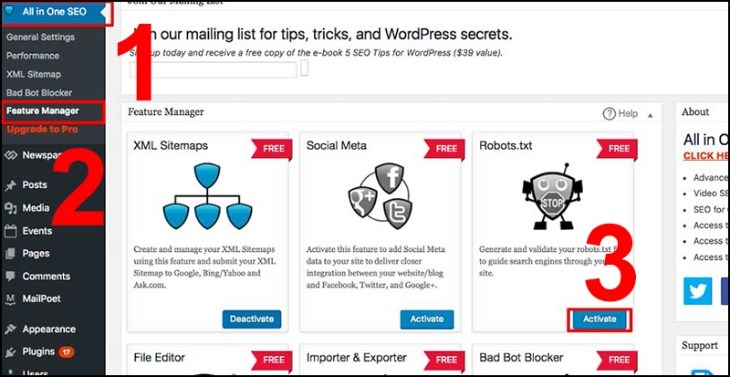

Another quick way to create a robots.txt file for WordPress is to use the All in One SEO plugin, a simple and easy-to-use plugin for WordPress. Follow these steps:

Step 1 : Open the main interface of the All in One SEO Pack plugin. If you do not have this plugin installed, install it first.

Step 2 : Select All in One SEO > Feature Manager, then click Activate for the Robots.txt feature.

Select Feature Manager in All in One SEO plugin

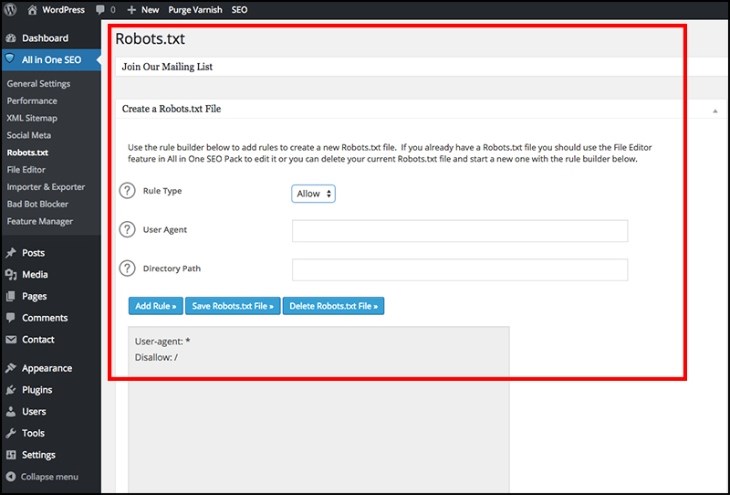

Step 3 : Create and adjust the robots.txt file for your WordPress site.

Location to create and edit the robots.txt file

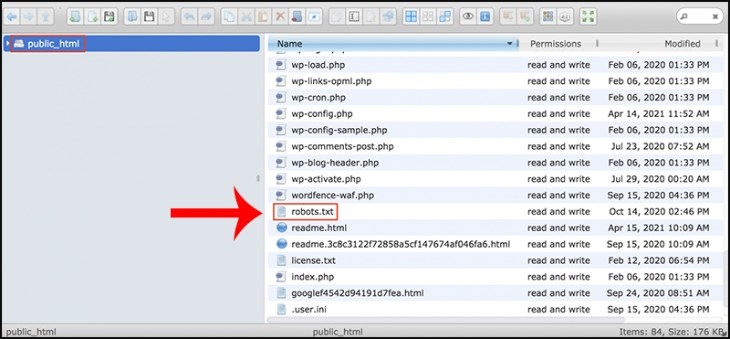

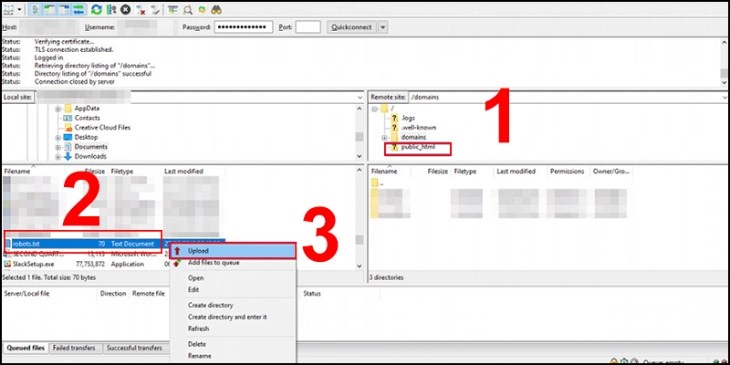

Method 3: Create and upload robots.txt file via FTP

Creating the robots.txt file yourself and uploading it via FTP is a simple and convenient method. Follow these steps:

- Step 1 : Use Notepad or TextEdit to create a plain text file named robots.txt that contains your rules.

- Step 2 : Use FTP to access the public_html folder and check whether a robots.txt file already exists there.

- Step 3 : Upload the newly created robots.txt file there.

Upload robots.txt file to FTP
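For readers who prefer to script these steps, here is a minimal sketch using Python's standard `ftplib`. The rules are the ones quoted earlier in this article. The sitemap URL, the FTP host and the credentials are placeholders, and the helper names are hypothetical. Adjust the `public_html` folder name if your host uses a different web root.

```python
import io
from ftplib import FTP


def build_robots_txt(sitemap_url):
    """Step 1: produce the text that would be typed into Notepad or TextEdit."""
    lines = [
        "User-agent: *",
        "Disallow: /wp-admin/",
        "Disallow: /wp-includes/",
        "",
        f"Sitemap: {sitemap_url}",
    ]
    return "\n".join(lines) + "\n"


def upload_robots_txt(ftp, content, remote_dir="public_html"):
    """Steps 2-3: enter the web root and store the file as robots.txt."""
    ftp.cwd(remote_dir)
    ftp.storbinary("STOR robots.txt", io.BytesIO(content.encode("utf-8")))


# Usage with a real server (host and credentials are placeholders):
# with FTP("ftp.abcdef.com") as ftp:
#     ftp.login("username", "password")
#     upload_robots_txt(ftp, build_robots_txt("https://abcdef.com/sitemap.xml"))
```

Uploading overwrites any existing robots.txt in that folder, so it may be worth downloading the current file first.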

Some rules when creating a robots.txt file

When creating a robots.txt file for WordPress, follow these rules to avoid errors:

- Place the WordPress robots.txt file in the top-level directory of your site so that bots can find it.

- The filename is case-sensitive, so name the file robots.txt, not “Robots.txt” or “robots.TXT”.

- Do not use Disallow entries to block /wp-content/themes/ or /wp-content/plugins/ , because search engines need these resources to evaluate the appearance of the blog or website.

- Some user agents, such as malicious bots or email scrapers, may ignore the robots.txt file entirely.

- Robots.txt files are publicly accessible: anyone can add /robots.txt to the end of the root domain to see the site’s directives. Therefore, this file should not be used to hide personal information.

- Each subdomain on a root domain uses its own robots.txt file. It is good practice to list the location of the sitemaps for that domain at the bottom of its robots.txt file.

Each subdomain has its own robots.txt file
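To illustrate the location and subdomain rules, the sketch below works out which robots.txt file governs a given page. The function name and the example URLs are made up for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_for(page_url):
    """Return the robots.txt URL that governs the given page URL."""
    parts = urlsplit(page_url)
    # The scheme and host (including any subdomain) are kept; the path is
    # always the lowercase /robots.txt at the top level of that host.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_for("https://blog.abcdef.com/2023/post/?page=2"))
print(robots_txt_for("https://abcdef.com/shop/item"))
```

The two calls return different files, `https://blog.abcdef.com/robots.txt` and `https://abcdef.com/robots.txt`. Rules written for the root domain therefore do not carry over to the blog subdomain.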

Some notes when using the robots.txt file

When using the robots.txt file, keep the following points in mind:

- Links on pages blocked by robots.txt will not be followed by bots. Unless the linked resources are also linked from other pages that bots can access, they may not be crawled or indexed.

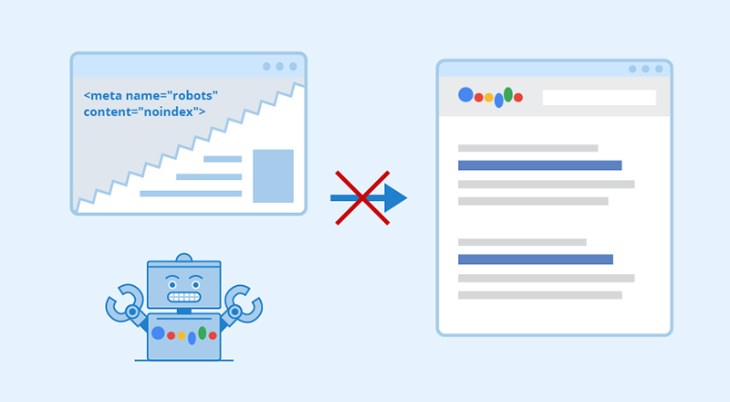

- The robots.txt file should not be used to keep sensitive data out of SERP results. Other pages may link directly to the page containing the private information, bypassing the robots.txt directives on your root domain or homepage, so the page may still be indexed.

- Most user agents from the same search engine follow the same rules, so there is no need to specify directives for each user agent. However, doing so can still help you fine-tune how the website content is crawled.

- Search engines cache the content of the WordPress robots.txt file but usually refresh the cached copy at least once a day. If you want a change to be picked up faster, you can use the Submit function of the robots.txt testing tool.

- If you want to keep your site out of search results, use another method instead of creating a robots.txt file for WordPress, such as password protection or the noindex meta directive.

The noindex meta directive keeps pages out of search results
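The noindex meta directive is a tag of the form `<meta name="robots" content="noindex">` placed in a page's head. As a rough sketch, the following uses Python's standard `html.parser` to check whether a page carries it. The class name, the function name and the sample page are hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects the directives of <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            self.directives.update(part.strip().lower() for part in content.split(","))


def has_noindex(html):
    """Return True if the page asks search engines not to index it."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    return "noindex" in finder.directives


page = '<html><head><meta name="robots" content="noindex, nofollow"></head><body></body></html>'
print(has_noindex(page))
```

A crawler can only see this tag if it is allowed to load the page, so a page that should carry noindex must not also be blocked in robots.txt.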

The above is an overview of the robots.txt file and how to create one for WordPress. If you have any questions about the robots.txt file, please leave a comment and a support expert will answer.
